kw: book reviews, nonfiction, taxidermy, biographies

Quick quiz to start things off: You have a choice of artifacts to purchase and take home, perhaps to display in your den. One is a mounted pair of fox kits in an attitude of play, the other is a jackalope. Assuming prices are similar (which is unlikely), which would you rather have? Based on sales figures just at Wall Drug in South Dakota, which sells 1,200 mounted jackalopes each year, the odds are about a thousand to one in favor of that horned hare! The last time I was at Wall Drug, I was told the most popular version of the jackalope has antelope antlers rather than the young deer antlers shown here, plus pheasant wings.

That's a lot of jackrabbits that had to die to satisfy a whimsy. But tell me, is the mounted pair of fox kits any less whimsical? It is more "authentic", because it depicts something real. But this is not a hunting trophy, like that stag's head on the wall of the neighborhood sports hunter. I sure hope the kits weren't killed on purpose just to make this display. But museum hunters still kill many animals just for the purpose of mounting them for display in dioramas or other museum exhibits. Even the tiny local Natural History Museum has a couple dozen rather large taxidermized animals (lion, wildebeest and similar critters) mounted and on display.

The Authentic Animal: Inside the Odd and Obsessive World of Taxidermy by Dave Madden is part biography and part natural history, the natural history of that interesting human variant, taxidermists. The biographical part concerns Carl Akeley, who has been called the father of modern taxidermy, even though nearly every technique he promoted had been invented by others, sometimes a century prior. The author devotes about half the book's text to biographical material and the other half to his own rather obsessive (as he admits) pursuit of things taxidermic. He interviewed a number of taxidermists and attended several competitive exhibits, where judges examine every hair or feather, every skin fold, of a mount for perfection of technique, as well as the overall composition and arrangement.

At one such show, Madden was admiring a lovely wood duck on a pedestal, when suddenly it moved! A man standing nearby said, "He's a good duck." Quiet and unflappable, this living duck happened to be well practiced in stillness, a necessary skill for all prey animals. At a taxidermy show, this pet duck was remarkable, being the only living animal present.

You know the old joke: If you hear "clippity clop" hoofbeats outside your window, you don't automatically think "Zebra!" Well, unless you are on safari in the Okavango Delta. There, it is the horse that is unusual.

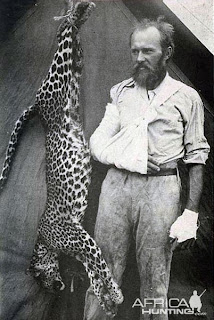

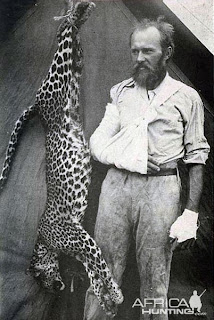

Carl Akeley became something of a legend in his own time, in part by killing a leopard bare-handed once he ran out of ammunition (he missed it at least five times). It attacked him, going for the throat, and he got his right arm up and into its jaws, then punched it to death, breaking ribs and finally puncturing the lungs. As you can see, this was a young animal, a juvenile. A stronger adult animal would have been able to bite through the arm bones and finish Akeley off rather quickly.

This was on Akeley's first visit to Africa, in about 1896, after he had become an established taxidermist at the Field Museum in Chicago (he moved to the American Museum of Natural History later). He visited Africa five times, dying there of dysentery in 1926.

As the story goes, when he was a boy, the pet bird of a family friend died, and young Clarence (who preferred Carl in later life), learning from books, skinned it and mounted the skin in a pretty lifelike pose. It is not clear how much the friend appreciated the gesture.

As a young man, Carl worked at Ward's, a commercial taxidermy house that catered to rich clients. It was a bit of a sweatshop, and Carl's preference for getting the details right made him slower than the others, which led to his firing after a few years. Yet that same eye for detail, and a few innovations in preparing lighter-weight manikins, led to museum jobs and growing fame.

But I find most interesting the author's travels in the world of taxidermists. He never tried to mount an animal skin himself. He studied those who do, learning what makes them tick. Most are hunters, but as time passes, most taxidermists turn their skills to mounting others' kills, either as favors or as a business, or to win awards. At one time, taxidermists made their own manikins, carved from soft wood or foam, or molded in hollow plaster or fiberglass. Now you can buy a dozen sizes of prepared manikin for the animal of your choice, in most cases. You can get a deer manikin of your chosen size with four neck positions, from erect to full sneak (almost feeding position). With luck, the skin can be mounted forthwith. One may need to do a bit of carving here and padding there. The artistry that remains is all in the plumping of lips and arrangement of eyelids about glass eyes, and a few other details here and there.

It kinda reminds me of the way ham radio has changed since I was licensed in the 1980s. You could at that time still buy parts or kits and make a radio, or even scrounge at a surplus store and really go "homebrew". A very few hams still build, but most are "appliance operators". When you can buy a transmitter for $250 that is better than anything you are likely to build, why go to the effort? Most hobbies are the same, and both hobby and commercial taxidermy are almost wholly dependent on the vendors. Basically, you start with a dead animal, skin it, then use one of several commercial flensing machines to strip inner flesh from the pelt, leaving, for a squirrel-to-deer-sized animal, no more than a millimeter or two thickness. After that, you use various softening compounds and adhesives, and commercial arsenical or insecticidal soap, a commercial manikin, glass eyes chosen from quite an impressive selection, and perhaps even a photo-mural diorama out of the catalog. It's getting harder to find areas in which to display one's artistry.

There is a long chapter on why we kill animals, anyway, besides the need to eat some of them. Bragging rights is one big motivator (and I find the impulse understandable but nauseating). I suppose I must, grudgingly, admit the museum's need to educate the public with properly-mounted specimens of animals most people would never see. But programs like PBS's Nature, and series like Wild Kingdom elsewhere, let people see the animals in motion, albeit in heavily-edited footage that doesn't show much of the boring stuff (it takes a lion or leopard a couple days to sleep off a good meal).

There is also a chapter about human taxidermy and similar efforts. There was only one real taxidermist of humans, Edward Gein, a person who was clearly deranged; most of his makings were fetish objects. In a house filled with skulls he had lampshades and chairs of tanned human skin, and lots and lots of "unmentionables". At least Gein didn't make fresh kills. He was a grave robber. Kind of like an archetypal Ygor only a dozen points crazier. But in the same chapter the author discusses a few exhibits of preserved humans, other than mummies, in which the skin is missing. The most celebrated is Body Worlds, which I went to see when it showed in Philadelphia a few years ago. You can't call it taxidermy, as "derm" means "skin." Maybe the ones that just show veins and arteries outlining an entire body are "taxiveny". Many of the rest of the plastinated bodies show musculature. I found it eerily fascinating. Once was enough. It seems we have an inborn, powerful aversion to making human taxidermy.

What of the impulse to preserve an animal's figure in this way? Given that fewer than a third of us have the impulse to collect (making collecting, as Madden writes, deviant behavior), why collect mounted skins? Is it to honor the animal, or to connect with our own animal nature? As with other endeavors, the reasons are as diverse as height or the depth of one's voice. I like natural history museums, though I spend most of my time there with the rocks, some with the shells, some with bones, and almost none with the mounted "specimens". I am not comfortable in a home "decorated" with mounts. A mounted fish or moose head in a restaurant doesn't put me off my feed, but doesn't interest me, either. I suppose there are those whose favorite dining room is filled with mounts. For this subject, more than most, "to each his own" is full of meaning.

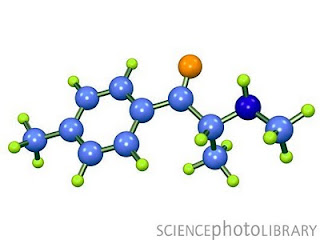

This is one of the "bath salts" molecules. Strange that such a small molecule can have such profound effects. Of course, one of the smallest molecules, HCN, or cyanide, is fatal in very small amounts.

Then there is this stuff. Propofol, the molecule that killed Michael Jackson. In yesterday's court debate, the defense claimed that the doctor was weaning the singer off the drug. Strangely, he had just ordered another three gallons of it!